llm_generate_code

To get started:

Dynamically pull and run

from hamilton import dataflows, driver

# downloads into ~/.hamilton/dataflows and loads the module -- WARNING: ensure you know what code you're importing!
llm_generate_code = dataflows.import_module("llm_generate_code", "zilto")

dr = (
    driver.Builder()
    .with_config({})  # replace with configuration as appropriate
    .with_modules(llm_generate_code)
    .build()
)

# If you have sf-hamilton[visualization] installed, you can see the dataflow graph
# In a notebook this will show an image, else pass in arguments to save to a file
# dr.display_all_functions()

# Execute the dataflow, specifying what you want back. Will return a dictionary.
result = dr.execute(
    [llm_generate_code.CHANGE_ME, ...],  # this specifies what you want back
    inputs={...}  # pass in inputs as appropriate
)

Use published library version

pip install sf-hamilton-contrib --upgrade # make sure you have the latest

from hamilton import dataflows, driver

# Make sure you've done - `pip install sf-hamilton-contrib --upgrade`
from hamilton.contrib.user.zilto import llm_generate_code

dr = (
    driver.Builder()
    .with_config({})  # replace with configuration as appropriate
    .with_modules(llm_generate_code)
    .build()
)

# If you have sf-hamilton[visualization] installed, you can see the dataflow graph
# In a notebook this will show an image, else pass in arguments to save to a file
# dr.display_all_functions()

# Execute the dataflow, specifying what you want back. Will return a dictionary.
result = dr.execute(
    [llm_generate_code.CHANGE_ME, ...],  # this specifies what you want back
    inputs={...}  # pass in inputs as appropriate
)

Modify for your needs

To modify the dataflow, copy it to a new folder (you can also rename it) and edit it there.

dataflows.copy(llm_generate_code, "path/to/save/to")

Purpose of this module

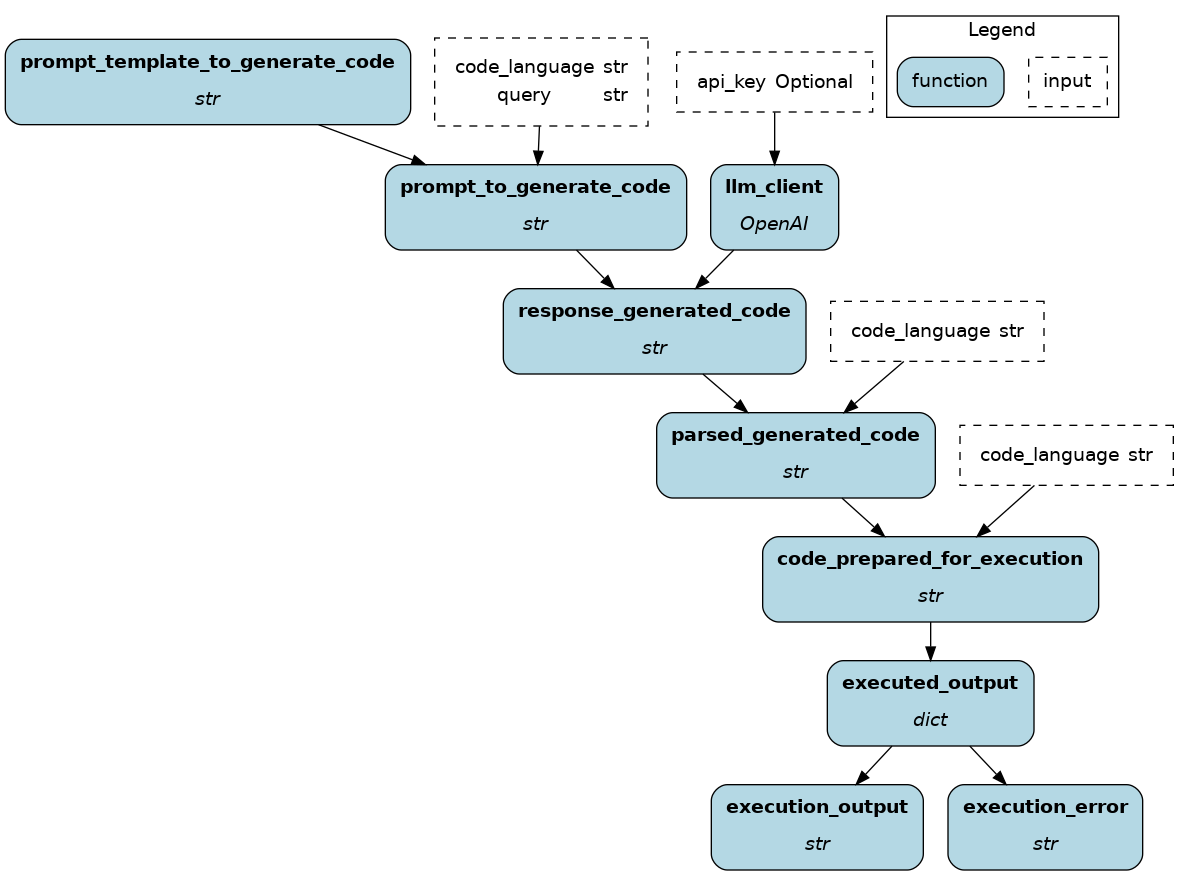

This module uses the OpenAI completion API to generate code.

For any language, you can request `parsed_generated_code` to get the code extracted from the response, or `response_generated_code` to get the raw completion text. If you are generating Python code, you can also execute it in a subprocess by requesting `execution_output` and `execution_error`.
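To illustrate what `parsed_generated_code` returns, here is a standalone sketch of the same `str.partition` logic the module uses, applied to a made-up response string. The `extract_code` name and the sample response are hypothetical; the fence marker is built at runtime only so this snippet doesn't close its own Markdown block.

```python
# Standalone sketch mirroring parsed_generated_code: keep only the text between
# the opening "<fence>python" marker and the next closing fence.
FENCE = "`" * 3  # three backticks, built programmatically


def extract_code(response: str, code_language: str = "python") -> str:
    """Return the code between the first opening fence and the next closing fence."""
    _, _, lower_part = response.partition(f"{FENCE}{code_language}")
    code_part, _, _ = lower_part.partition(FENCE)
    return code_part


# A made-up completion, shaped like what the prompt template asks for.
sample_response = f"Here you go:\n{FENCE}python\nx = 1 + 1\nprint(x)\n{FENCE}\nDone."
print(repr(extract_code(sample_response)))  # '\nx = 1 + 1\nprint(x)\n'
```

If the completion contains no fenced block for the requested language, `partition` finds no separator and the result is an empty string, so downstream execution receives no code.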

Example

from hamilton import driver
import __init__ as llm_generate_code  # run from the dataflow's folder so `__init__.py` is importable

dr = driver.Builder().with_modules(llm_generate_code).build()
dr.execute(
    ["execution_output", "execution_error"],
    inputs=dict(
        query="Retrieve the primary type from a `typing.Annotated` object",
    )
)
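The execution half of the dataflow can be tried without an API key. The sketch below is a standalone approximation of `code_prepared_for_execution` plus `executed_output`: it appends the same variable-collecting snippet and runs the result in a child interpreter. It swaps in `subprocess.run` and `sys.executable` (the module itself calls `subprocess.Popen` with `"python"`), and `run_generated_python` is a hypothetical helper name.

```python
import subprocess
import sys

# Same snippet the module appends in code_prepared_for_execution: it prints every
# module-level variable defined by the generated code, minus builtins.
CODE_TO_GET_VARS = (
    "excluded_vars = { 'excluded_vars', '__builtins__', '__annotations__'} | set(dir(__builtins__))\n"
    "local_vars = {k:v for k,v in locals().items() if k not in excluded_vars}\n"
    "print(local_vars)"
)


def run_generated_python(code: str) -> dict:
    """Run `code` plus the variable-collecting snippet in a child interpreter."""
    # The newline keeps the snippet on its own line even if `code` has no trailing newline.
    program = code + "\n" + CODE_TO_GET_VARS
    completed = subprocess.run(
        [sys.executable, "-c", program],  # assumption: reuse the parent's interpreter
        capture_output=True,
        text=True,
    )
    return dict(execution_output=completed.stdout, execution_error=completed.stderr)


result = run_generated_python("s = 'hello world'")
print(result["execution_output"])  # a dict repr that includes 's': 'hello world'
```

Errors raised by the generated code don't propagate to the parent; they show up as a traceback in `execution_error`, which is why the dataflow returns both strings.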

Configuration Options

Config.when

This module doesn't take any configuration.

Inputs

- `query`: The query for which you want code generated.
- `api_key`: Set the OpenAI API key to use. If `None`, read the environment variable `OPENAI_API_KEY`.
- `code_language`: Set the code language to generate the response in. Defaults to `python`.
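To see how `query` and `code_language` end up in the prompt, here is a minimal sketch of the `str.format` call that `prompt_to_generate_code` performs. The template is a simplified stand-in, not the module's exact text, and `fill_prompt` is a hypothetical name.

```python
FENCE = "`" * 3  # three backticks, built programmatically

# Simplified stand-in for prompt_template_to_generate_code (not the exact text).
PROMPT_TEMPLATE = "\n".join([
    "Write some {code_language} code to solve the user's problem.",
    "",
    FENCE + "{code_language}",
    "....",
    FENCE,
    "",
    "user problem",
    "{query}",
])


def fill_prompt(query: str, code_language: str = "python") -> str:
    """Substitute the two fields, as prompt_to_generate_code does."""
    return PROMPT_TEMPLATE.format(query=query, code_language=code_language)


prompt = fill_prompt("Reverse a string", code_language="rust")
print(prompt)
```

Changing `code_language` also changes the fence marker the model is asked to use, which is the marker `parsed_generated_code` later searches for.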

Overrides

- `prompt_template_to_generate_code`: Create a new prompt template with the fields `query` and `code_language`.
- `prompt_to_generate_code`: Manually provide the complete prompt used to generate code, bypassing the template.
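Because the module fills the template with `str.format(query=..., code_language=...)`, a custom template should use exactly those two named fields: a missing field silently drops information, and any other field raises `KeyError`. A minimal sketch that checks a candidate template with the standard library's `string.Formatter` before you pass it as an override; `template_fields` and `check_template` are hypothetical helpers.

```python
import string

REQUIRED_FIELDS = {"query", "code_language"}


def template_fields(template: str) -> set:
    """Collect the replacement-field names used in a str.format template."""
    return {
        field_name
        for _, field_name, _, _ in string.Formatter().parse(template)
        if field_name is not None  # None marks a chunk with no field; "" is a positional {}
    }


def check_template(template: str) -> None:
    """Raise ValueError unless the template uses exactly the two expected fields."""
    fields = template_fields(template)
    missing = REQUIRED_FIELDS - fields
    unexpected = fields - REQUIRED_FIELDS
    if missing or unexpected:
        raise ValueError(f"missing fields: {sorted(missing)}, unexpected fields: {sorted(unexpected)}")


custom = "Solve this in {code_language}, returning only code:\n{query}\n"
check_template(custom)  # passes: both fields present, nothing extra
```

A template that passes could then be supplied through `dr.execute(..., overrides={"prompt_template_to_generate_code": custom})`.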

Extension / Limitations

- Executing arbitrary generated code is a security risk. Proceed with caution.

- You need to manually install the dependencies your generated code imports before it can be executed (e.g., you need to `pip install pandas` yourself).
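Before executing generated code, you could check which of its imports are missing from the environment. A minimal sketch using the standard library's `ast` and `importlib.util.find_spec`; `missing_top_level_imports` is a hypothetical helper, not part of the module.

```python
import ast
import importlib.util


def missing_top_level_imports(code: str) -> list:
    """Return top-level module names imported by `code` that can't be found."""
    tree = ast.parse(code)  # raises SyntaxError if the generated code doesn't parse
    names = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # skip relative imports such as "from . import x"
            names.add(node.module.split(".")[0])
    # find_spec returns None when no installed module matches a top-level name
    return sorted(name for name in names if importlib.util.find_spec(name) is None)


generated = "import os\nimport not_a_real_pkg_xyz\nfrom json import dumps\n"
print(missing_top_level_imports(generated))  # ['not_a_real_pkg_xyz']
```

Import names don't always match PyPI package names (`sklearn` is installed as `scikit-learn`), so treat the result as a hint. The check also inspects the interpreter running it, which matches the subprocess only if `python` on your `PATH` is that same interpreter.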

Source code

__init__.py

import logging
import os
import subprocess
from typing import Optional

from hamilton.function_modifiers import extract_fields

logger = logging.getLogger(__name__)

from hamilton import contrib

with contrib.catch_import_errors(__name__, __file__, logger):
    import openai

def llm_client(api_key: Optional[str] = None) -> openai.OpenAI:
    """Create an OpenAI client."""
    if api_key is None:
        api_key = os.environ.get("OPENAI_API_KEY")
    return openai.OpenAI(api_key=api_key)

def prompt_template_to_generate_code() -> str:
    """Prompt template to generate code.

    It must include the fields `code_language` and `query`.
    """
    return """Write some {code_language} code to solve the user's problem.

Return only {code_language} code in Markdown format, e.g.:

```{code_language}
....
```

user problem
{query}

{code_language} code
"""

def prompt_to_generate_code(
    prompt_template_to_generate_code: str, query: str, code_language: str = "python"
) -> str:
    """Fill the prompt template with the code language and the user query."""
    return prompt_template_to_generate_code.format(
        query=query,
        code_language=code_language,
    )

def response_generated_code(llm_client: openai.OpenAI, prompt_to_generate_code: str) -> str:
    """Call the OpenAI API completion endpoint with the prompt to generate code."""
    response = llm_client.completions.create(
        model="gpt-3.5-turbo-instruct",
        prompt=prompt_to_generate_code,
        max_tokens=1024,  # the completions endpoint defaults to 16 tokens, which truncates the code
    )
    return response.choices[0].text

def parsed_generated_code(response_generated_code: str, code_language: str = "python") -> str:
    """Retrieve the code section from the generated text."""
    _, _, lower_part = response_generated_code.partition(f"```{code_language}")
    code_part, _, _ = lower_part.partition("```")
    return code_part

def code_prepared_for_execution(parsed_generated_code: str, code_language: str = "python") -> str:
    """Append statements that prepare the generated Python code to run in a subprocess.

    We collect all local variables in a dictionary and filter out Python builtins to keep
    only the variables from the generated code. print() is used to send string data from
    the subprocess back to the parent process via its `stdout`.
    """
    if code_language != "python":
        raise ValueError("Can only execute the generated code if `code_language` = 'python'")

    code_to_get_vars = (
        "excluded_vars = { 'excluded_vars', '__builtins__', '__annotations__'} | set(dir(__builtins__))\n"
        "local_vars = {k:v for k,v in locals().items() if k not in excluded_vars}\n"
        "print(local_vars)"
    )
    # the newline keeps the appended statements on their own line if the code lacks a trailing newline
    return parsed_generated_code + "\n" + code_to_get_vars

@extract_fields(
    dict(
        execution_output=str,
        execution_error=str,
    )
)
def executed_output(code_prepared_for_execution: str) -> dict:
    """Execute the generated Python code + appended utilities in a subprocess.

    The output and errors from the code are collected as strings. Executing
    the code in a subprocess provides isolation, but isn't a security guarantee.
    """
    process = subprocess.Popen(
        ["python", "-c", code_prepared_for_execution],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,
    )
    output, errors = process.communicate()
    return dict(execution_output=output, execution_error=errors)

# run as a script to test dataflow
if __name__ == "__main__":
    import __init__ as llm_generate_code
    from hamilton import driver

    dr = driver.Builder().with_modules(llm_generate_code).build()
    dr.display_all_functions("dag.png", orient="TB")

    res = dr.execute(
        ["execution_output", "execution_error"],
        # override the parsed code so the dataflow runs without calling the OpenAI API
        overrides=dict(parsed_generated_code="s = 'hello world'\n"),
    )
    print(res)

Requirements

openai